I have noticed a trend. Design used to play a central role in shaping products, but now products seem increasingly shaped by executives and project managers (using AI). At the same time, UX designers have come to be seen as an obstacle by PMs, because they constantly question whether users actually want, or will use, the proposed products. Companies seem more focused on hiring managers who prioritize delivery than on hiring craft-based professionals.

Are we moving into an era of intelligence amplification — or cognitive collapse?

Innovation evolves, and challenges become more complex.

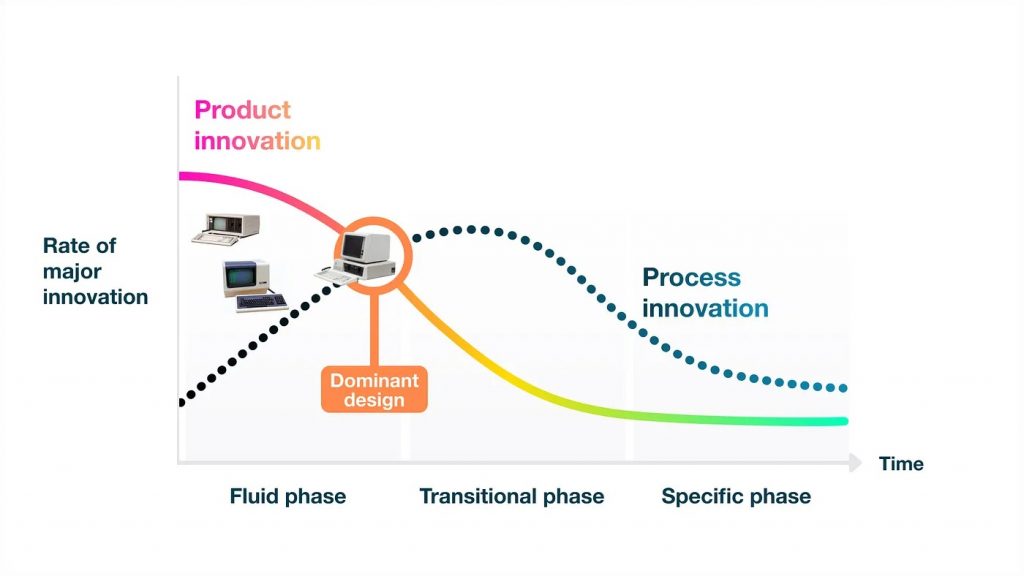

The process of innovation occurs in cycles, much like the changing seasons throughout the year. MIT professor James M. Utterback’s three phases — Fluid, Transitional, and Specific — illustrate how product design and processes typically develop over time.

According to Utterback’s model, the innovation cycle begins in the Fluid phase. Think of the early personal computer market, or the format war between VHS and Betamax: the market is wide open, volatile, and filled with uncertainty.

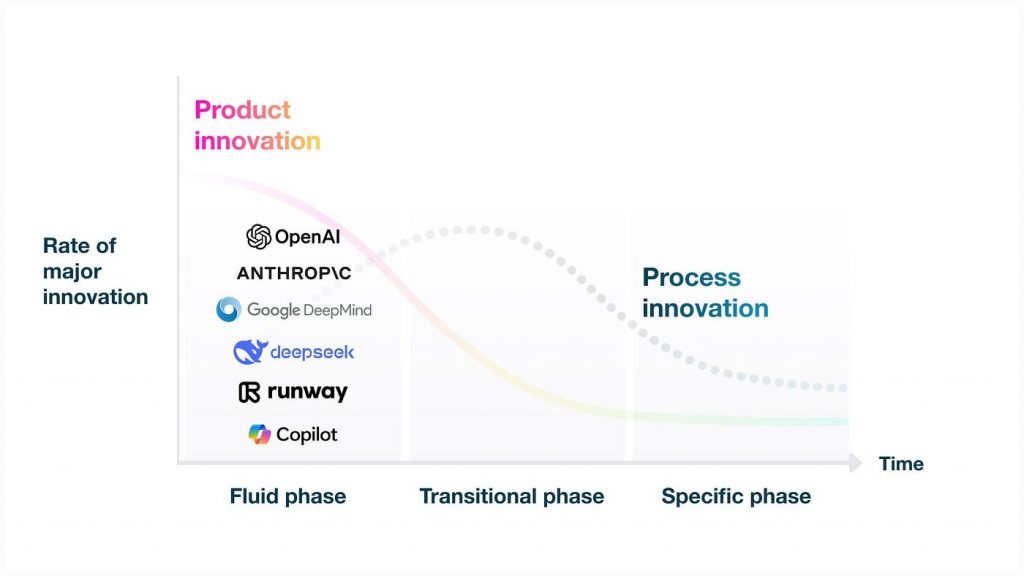

Today, innovation is driven by AI, and the field appears to be in its own Fluid phase. Leading players such as OpenAI (ChatGPT), Anthropic (Claude), and Google DeepMind (Gemini) are competing to establish their positions.

Intelligence Amplification or Cognitive Bankruptcy?

I recently came across an insightful interpretation of AI known as intelligence amplification, as cited by Don Norman in his book Design for a Better World. Although the concept of intelligence amplification was proposed by cyberneticists and early computing pioneers in the 1950s and 1960s, it can help us reframe AI not as a replacement for human capabilities but as an augmentation of them.

On the other hand, cognitive bankruptcy—or cognitive debt—refers to the mental toll of relying too heavily on AI. As Michael F. Buckley mentions in his article about the effects of AI on the brain, “…instead of chemically altering our brains, we’re numbing them cognitively.”

There is evidence to support this view. Research suggests that relying heavily on large language models (LLMs) such as ChatGPT weakens our ability to remember, or even quote, information we have just worked with. Instead of using our own cognitive abilities, we take a back seat and let the machine take control.

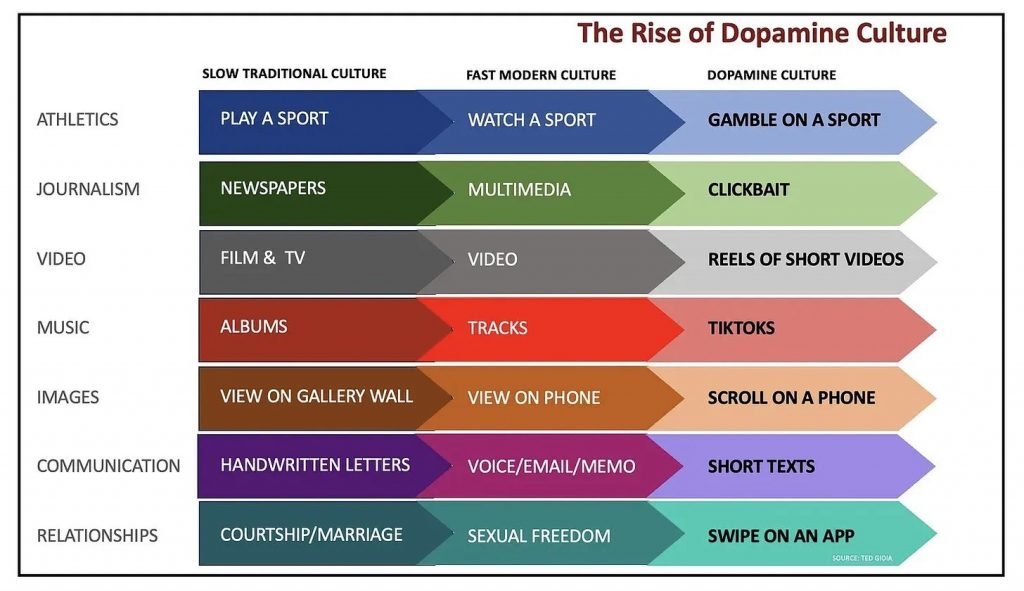

As Sam Matla points out, “We don’t go deep anymore. We don’t read. Our collective cognition is dominated by whatever the current trend is. We know more about the events of the last 24 hours than about the last 500 years, and it shows.”

The issue lies not only in the convenience provided by these technologies but also in our addiction to the instant gratification they offer. Getting quick answers and endlessly scrolling through reels may provide a temporary high but gradually deplete our mental resources.

To summarize these concepts:

- Intelligence Amplification: Deepens insight, enhances learning, and augments human creativity.

- Cognitive Bankruptcy: Bypasses critical thinking, weakens cognitive function, and increases dependency on technology.

Here’s how poor use of AI can lead to cognitive bankruptcy:

Some organizations, like Klarna, famously shifted their customer service to AI, only to face significant backlash. In other cases, leaders are using automation to turn design from a scientifically based practice into a purely craft-oriented one, often skipping essential phases such as discovery.

While organizations strive to find the right balance with AI, removing the genuine human voice is a step toward cognitive bankruptcy.

Examples of scenarios that can lead to cognitive bankruptcy include:

- Automating customer service: Replacing human support with AI can erode brand trust and hinder the organization’s ability to effectively handle sensitive situations.

- Reducing headcount for AI gains: Cutting full-time staff or contractors in favor of AI tools eliminates the diverse thinking and human friction that foster culture and innovation.

- Oversimplifying design: Treating design as just a visual craft instead of a science causes teams to focus on features rather than insights, reinforcing a “we know what we know” mindset.

- Blind trust in system outputs: Overreliance on AI or automated suggestions increases the risk of accidents, errors, poor decisions, and other negative outcomes.

So, will we use AI to enhance our intelligence, or will it ultimately lead us to abandon critical thinking altogether?