- Simulated attention does not equal real attention. AI attention maps are abstract simulations. They are trained on generalized models of visual salience rather than actual user behavior, intent, or familiarity. While they may be an interesting exercise with some applications in specific contexts, they should not be considered reliable evidence for user experience (UX), especially when used in isolation.

- A flawed assumption for a frequently used interface. Attention mapping, particularly automated AI versions, is best suited for simulating first impressions: what might catch your eye when you see something for the first time. However, a phone’s home screen is not a new interface; it’s one that users are deeply familiar with. People don’t “look” for their apps; they already know where each one is. Applying a “first exposure” analysis to a muscle-memory interface misses the point.

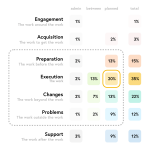

The heatmap from this analysis lets us visualize how gestalt principles break down in the full liquid glass mode. Understanding gestalt principles enables us to design visual hierarchies, influence the order in which users scan information, and prioritize content for quicker and easier retrieval.

We achieve this by leveraging factors such as shape, size, position, similarity, and contrast.

As the heatmap illustrates, if we make everything uniform, we fail to use these principles effectively, leaving users without a clear starting point.

This lack of differentiation makes it harder to focus on specific elements and find necessary information, and it increases visual workload. Over time, this added strain can lead to frustration and dissatisfaction.